Meta Learning in Internet of Things

Course Overview

Learning on the Internet of Things (IoT) has particularities that call for a change of paradigm. In this course, you will discover approaches that take these particularities into account in the learning pipeline. Most of these developments are best described within the meta-learning paradigm.

- Lecture 1 (week 1): Introduction to the Internet of Things (signal acquisition, from signal to data, data transmission, data transformations)

- Lecture 2 (week 2): Introduction to Machine Learning through IoT: federated learning, meta-learning, …

- Lectures 3 to 5: ML for IoT through the Exam Monitoring System project.

- Evaluation (week 6): Selected papers presentation

- Lectures 7 to 9 (weeks 7 to 9): ML for IoT through the Exam Monitoring System project.

- Evaluation (week 10): see the project description for the deadlines and deliverables.

ExamMonitoringSystem

Welcome to the main page of the course ML-IoT Edition 2023.

- PI: Aomar Osmani

- TA: @HamidiMassinissa

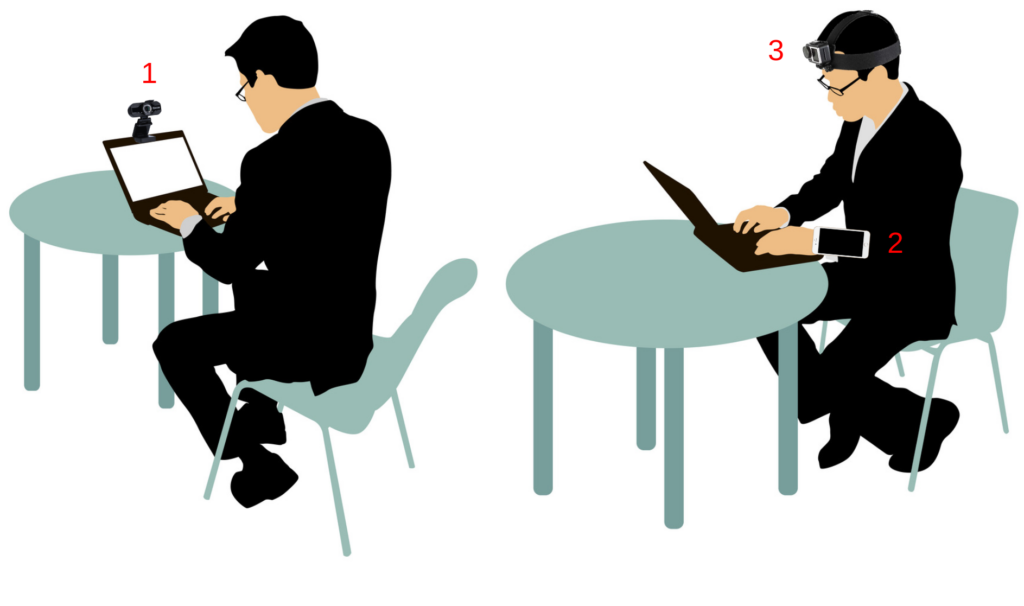

These data sources generate data of different modalities (sound, video, acceleration, gravity, etc.). The idea is to build machine learning models that learn to interpret and fuse these data in order to detect any suspicious behavior.

To keep things simple, we will consider smartphones (and their embedded sensors) to be the data sources from which we want to learn.

More generally, each group can propose its own AI solution to this problem, including the use of GPT and its derivatives or any other available solution. The evaluation will be based on the quality of the results, the choice of architectures, the quality of the modelling, the relevance of the proposed pipeline, and how well you have mastered the proposed solutions. Solutions to which your own contribution is close to zero are excluded.

You must cite, clearly and in a way that allows verification, any use of tools, code, articles, or contributions by others in your project, even minor ones. Failure to do so will void the submission and lead to sanctions. Any omission will be considered deliberate.

Using the work and ideas of others is not forbidden, but you must say so and explain how they are integrated into your work.

Machine Learning

Computer vision

Various models are available online and can be used to build the exam monitoring system. For example, this LINK points to a machine learning model that detects face orientation. Such a model could help verify whether a student is looking away from the screen for long periods of time.

Human activity recognition

You can leverage the sensors available on your smartphones, such as the accelerometer, magnetometer, gyroscope, etc., to get a clearer understanding of the current situation. The computer vision module alone can be bypassed with simple adversarial strategies, so the additional modalities used to recognize the current activity (or movements) can make the system more robust.
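As an illustration of how motion data could feed an activity recognizer, here is a minimal sketch that cuts an accelerometer stream into fixed-size windows and summarizes each one by the mean and standard deviation of the acceleration magnitude. The window size, the choice of features, and the sample values are assumptions made for the example, not requirements of the project.

```python
import math
from statistics import mean, pstdev

def magnitude(sample):
    """Euclidean norm of one (x, y, z) accelerometer sample."""
    x, y, z = sample
    return math.sqrt(x * x + y * y + z * z)

def sliding_windows(samples, size, step):
    """Yield consecutive windows of `size` samples, advancing by `step`."""
    for start in range(0, len(samples) - size + 1, step):
        yield samples[start:start + size]

def window_features(window):
    """Summarize one window by the mean and std of its acceleration magnitude."""
    mags = [magnitude(s) for s in window]
    return {"mean": mean(mags), "std": pstdev(mags)}

# Hypothetical recording: phone lying still (gravity only), then being moved.
still = [(0.0, 0.0, 9.81)] * 4
moving = [(3.0, 0.0, 9.81), (-3.0, 0.0, 9.81), (0.0, 4.0, 9.81), (0.0, -4.0, 9.81)]

features = [window_features(w) for w in sliding_windows(still + moving, size=4, step=4)]
for f in features:
    print(f)
```

The window recorded while the phone is moved shows a larger standard deviation than the still one, which is the kind of signal a classifier can pick up on.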

Mobile application

In Figure 2, you can see an example of a mobile application that can help set up the entire exam monitoring system. You essentially have the choice between keeping all (or part of) the data locally and transferring the generated data to a central server. In the former case, lightweight machine learning models have to be developed using appropriate frameworks (e.g., TensorFlow Lite or TensorFlow Federated) and deployed in the mobile application. In the latter case, the mobile application only collects the data and transmits it to the server.
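For the second option (collect and transmit), one possible way to package sensor readings before sending them to the server is sketched below. The message layout and the field names (`user_id`, `record_id`, `sensor`, `samples`) are hypothetical and only meant to show the idea; the transport itself (HTTP, sockets, etc.) is left out.

```python
import json

def encode_batch(user_id, record_id, sensor, samples):
    """Phone side: serialize one batch of timestamped samples as a JSON string."""
    return json.dumps({
        "user_id": user_id,
        "record_id": record_id,
        "sensor": sensor,
        "samples": [{"t": t, "values": list(values)} for t, values in samples],
    })

def decode_batch(message):
    """Server side: parse the JSON string back into a dictionary."""
    return json.loads(message)

# Hypothetical batch of two accelerometer samples (timestamp in seconds, x/y/z).
message = encode_batch(
    "user01", "rec001", "accelerometer",
    [(0.00, (0.1, 0.0, 9.8)), (0.02, (0.2, -0.1, 9.7))],
)
batch = decode_batch(message)
print(batch["sensor"], len(batch["samples"]))
```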

Schedule and deliverables

- Define the system components and the various scenarios you want to address;

- Set up the different components so that they can generate data.

NB: you are free to use any technology to achieve the goals above. The most important thing is to get data from the smartphones. In particular, you can tackle the different components individually.

Deliverable (last deadline 28/02/2023)

- The specifications (scenarios, sensor deployment, high-level algorithm to detect cheating attempts) and conceptual model (UML, E/R, etc.) of your exam monitoring system

- Source code for data generation from the different components (commit with message “[delivrable 1] System setup”)

- Collect and analyze the data you receive from the different components;

- Perform any necessary preprocessing on the collected data;

- Train and evaluate simple machine learning models on the collected data. There is no need for extraordinary results at this step; the idea is to get the learning pipeline set up and functioning correctly.

- Source code of your learning pipeline (commit with the message “[delivrable 2] Learning pipeline setup”)

- Notebooks showing the preprocessing steps and any other actions you performed on the data. Please keep the notebooks clean, i.e., put any boilerplate in utility files (utils.py)

- Explore the literature for ideas and available models

- Feel free to experiment with different learning models

- Perform, for example, hyperparameter optimization, transfer learning, gradient-based meta-initialization, etc.

- Do not forget to document every experiment you perform: draw figures of your models, loss evolution w.r.t. training epochs, hyperparameter importance, etc.

- Notebooks

- Papers

- Models that you used or drew inspiration from
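The "train and evaluate a simple model" and "hyperparameter optimization" steps listed above can be sketched in a few lines. The example below is only an illustration under stated assumptions: the data are synthetic feature vectors (mean and standard deviation of acceleration magnitude) with hypothetical labels `still` and `moving`, the model is a plain k-nearest-neighbours classifier, and the only hyperparameter searched is `k`.

```python
import math
import random
from collections import Counter

def knn_predict(train, x, k):
    """Majority label among the k training points closest to x."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(train, test, k):
    """Fraction of test points whose predicted label matches the true label."""
    hits = sum(knn_predict(train, x, k) == y for x, y in test)
    return hits / len(test)

# Synthetic, hypothetical data standing in for features extracted from recordings.
rng = random.Random(0)
data = [((9.8 + rng.gauss(0, 0.05), abs(rng.gauss(0, 0.05))), "still")
        for _ in range(30)]
data += [((10.5 + rng.gauss(0, 0.3), 0.5 + abs(rng.gauss(0, 0.1))), "moving")
         for _ in range(30)]
rng.shuffle(data)

# Hold-out split: 40 examples for training, 20 for validation.
train, valid = data[:40], data[40:]

# Minimal grid search over the single hyperparameter k.
scores = {k: accuracy(train, valid, k) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
print(scores, "best k:", best_k)
```

Logging the `scores` dictionary for each run is one simple way to keep the record of experiments that the notebooks are expected to contain.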

Project submission (deadlines: 28/02/2023 for V1, 10/03/2023 for the final version)

Evaluation

The projects will be evaluated on different criteria:

- novelty of the approach;

- novelty of the models;

- accuracy of the learned models;

- complexity of the solution.

Resources/indications

Mobile application to collect motion data from smartphones:

Here is a good starting point to build the mobile application: http://www.shl-dataset.org/app/. It is a mobile application developed by the University of Sussex. Its source code is available here.

More generally, you need to find or develop an application that streams sensor data from the phone to a PC and displays it in a dashboard. You can use applications such as:

– IP webcam, which lets you use your phone as an IP camera. It takes the video from the camera (front or rear) of your device and sends it as a network stream.

– Ipcam, for Apple phones, which lets you use your phone as a Wi-Fi IP camera.

Defining scenarios of potential cheating attempts:

You are asked to define a set of scenarios of potential cheating attempts. The idea is to use these scenarios to build a dataset which can then be used to validate the final system.

Proposed format to store your dataset:

You can run the scenarios you defined above many times and with different users to enrich the dataset. We propose the following format to store the collected data (this format takes the form of a filesystem hierarchy):

- /root/userid/recordid/front_camera.mp4

- /root/userid/recordid/station_camera.mp4

- /root/userid/recordid/hand_motion.txt

- /root/userid/recordid/label.txt
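A dataset stored in this hierarchy can be indexed with a short script. The sketch below walks `root/<userid>/<recordid>/` and reports, for each record, which of the four expected files are missing. The function name `list_records` and the returned dictionary keys are hypothetical, and the content of the files is deliberately not parsed, since its format is not specified here.

```python
from pathlib import Path

# File names taken from the proposed storage format.
EXPECTED_FILES = (
    "front_camera.mp4",
    "station_camera.mp4",
    "hand_motion.txt",
    "label.txt",
)

def list_records(root):
    """Return one dict per record directory found under root/<userid>/<recordid>/."""
    records = []
    for record_dir in sorted(Path(root).glob("*/*")):
        if not record_dir.is_dir():
            continue
        missing = [name for name in EXPECTED_FILES
                   if not (record_dir / name).is_file()]
        records.append({
            "user": record_dir.parent.name,
            "record": record_dir.name,
            "path": record_dir,
            "missing": missing,
        })
    return records

if __name__ == "__main__":
    # Hypothetical dataset location; adapt to where your recordings are stored.
    for rec in list_records("root"):
        status = "complete" if not rec["missing"] else f"missing {rec['missing']}"
        print(rec["user"], rec["record"], status)
```

Running such a check before training helps catch incomplete recordings early.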